Safety and Security of Artificial Intelligence

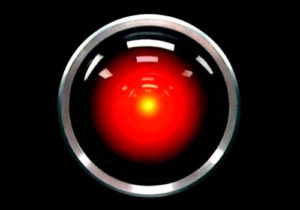

AI is rapidly emerging as an inevitable component of developing technologies. One can hardly point to an industry that is not already seeking to adopt advances in computational intelligence: from advertising to autonomous vehicles, from medical diagnosis to stock trading, from video games to missile defense and cyber-security, all strive to exploit AI techniques in the very near future. In the midst of this intensifying hype, a growing concern is whether AI can be trusted with the many critical tasks that smart machines are expected to perform. How can we ensure that our self-driving car never decides to rush its passenger off a cliff? How can we guarantee that autonomous military drones cannot be manipulated to target friendlies? Is there any way to prevent the intelligent teddy bears of the future from developing aggressive mental disorders and harming children? Can we implement a Skynet that never embarks on destroying the human race, or a post-Turing-test HAL 9000 that may never think of killing its companions?

My research aims to take first steps toward the safety and security of intelligent machines from an engineering perspective. Coming from a cyber-security background, my first task in approaching this problem is to show that there actually is a problem. With a focus on deep learning, and particularly deep reinforcement learning, I investigate the potential mechanisms and vulnerabilities that give rise to unreliable functioning of intelligent systems. I am also interested in studying the emergence of undesirable behaviors in self-learning systems by adopting psychological models of “mental disorders”, and I hope to extend such models to develop self-treatment mechanisms.

Dynamics and Security of Complex Networks

Any collection of interacting agents can be modeled as a network. Notable examples include neurons in the nervous system, birds flying in flocks, competing businesses in a market, and even wars, uprisings and insurgencies. Things get more exciting when the agents of such networks are autonomous and make decisions individually. I apply techniques from various disciplines such as game theory, machine learning, control theory and thermodynamics to study and model the evolution and nonlinear dynamics of these complex formations. I am particularly interested in the topological security of networks, and in methods for the inference, detection and exploitation of structural vulnerabilities in social, economic, biological, and communications networks.
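As a small illustration of what a structural vulnerability can look like, the sketch below finds the articulation points of an undirected graph, that is, the nodes whose removal splits the network into disconnected pieces. It uses the standard depth-first-search low-link technique and is only one simple notion of topological weakness, not a description of the methods used in this research. The function name `articulation_points` and the toy edge list are assumptions made for the example.

```python
from collections import defaultdict


def articulation_points(edges):
    """Return the set of nodes whose removal disconnects an undirected graph.

    Hypothetical helper for illustration; recursive, so suited to small graphs.
    """
    graph = defaultdict(set)
    for u, v in edges:
        graph[u].add(v)
        graph[v].add(u)

    disc = {}  # discovery time of each node in the DFS
    low = {}   # earliest discovery time reachable from the node's subtree
    cut = set()
    counter = 0

    def dfs(node, parent):
        nonlocal counter
        disc[node] = low[node] = counter
        counter += 1
        children = 0
        for nbr in graph[node]:
            if nbr == parent:
                continue
            if nbr in disc:
                # Back edge: the neighbor was already visited.
                low[node] = min(low[node], disc[nbr])
            else:
                children += 1
                dfs(nbr, node)
                low[node] = min(low[node], low[nbr])
                # A non-root node is critical if some child subtree
                # cannot reach above it without passing through it.
                if parent is not None and low[nbr] >= disc[node]:
                    cut.add(node)
        # The DFS root is critical only if it has several DFS children.
        if parent is None and children > 1:
            cut.add(node)

    for node in list(graph):
        if node not in disc:
            dfs(node, None)
    return cut


# Toy network: two triangles (a-b-c and d-e-f) joined by the link c-d.
edges = [("a", "b"), ("b", "c"), ("c", "a"),
         ("c", "d"), ("d", "e"), ("e", "f"), ("f", "d")]
print(sorted(articulation_points(edges)))  # ['c', 'd']
```

In the toy network, the two triangles communicate only through `c` and `d`, so removing either one fragments the graph. Real networks would call for richer measures (for example, weighted or dynamic notions of criticality), which this sketch does not attempt.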

Security of Aerial Systems

I work on the security of airborne communications, specifically on attacks and defense mechanisms for the physical and MAC layers of Flying Ad hoc Networks (FANETs). I investigate countermeasures against cyber-physical attacks on UAV networks, as well as vulnerabilities of the Traffic Collision Avoidance System (TCAS).